Different orders coexist

Data laundry

An image in conversation

Imagine a picture

Viewed through the lens of an algorithm

The divisible present

Shared objects

To consider an algorithm as an interlocutor implies an intense negotiation. Indeed, for a computer program, a text, an image and a blank screen are all represented at the lowest level as zeros and ones. By using file formats, we give a program a taxonomy of objects with which it can interact. A text format allows the processing of lines, verbs and expressions, while an image format allows the processing of colors, contours and shapes. On Erkki’s hard drive, we found many files saved in “historical” formats that had been regularly transformed and exported. Through these transformations, the taxonomy of the file changes: a text can behave as an image, or an image can masquerade as a text.
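To make the point about zeros and ones concrete, here is a minimal Python sketch (ours, not part of the authors' toolchain) that treats a file purely as bytes and guesses its format from a few well-known leading signatures, the so-called magic numbers. The signature table is a small, hand-picked assumption; tools such as `file` rely on far larger databases.

```python
# Signatures ("magic numbers") at the start of a few common formats.
# This table is a small, hand-picked assumption, not an exhaustive list.
SIGNATURES = {
    b"%PDF-": "pdf",
    b"\x89PNG\r\n\x1a\n": "png",
    b"\xff\xd8\xff": "jpeg",
    b"GIF87a": "gif",
    b"GIF89a": "gif",
}


def sniff_format(data: bytes) -> str:
    """Guess a format from the first bytes of a file; 'unknown' if nothing matches."""
    for magic, name in SIGNATURES.items():
        if data.startswith(magic):
            return name
    return "unknown"


def as_bits(data: bytes) -> str:
    """The lowest-level view: each byte written out as eight binary digits."""
    return " ".join(f"{byte:08b}" for byte in data)


header = b"%PDF-1.3\n"
print(sniff_format(header))  # pdf
print(as_bits(header[:4]))   # 00100101 01010000 01000100 01000110
```

On a real file, one would pass the first few bytes, for example `open(path, "rb").read(8)`. Nothing in the bits themselves says "text" or "image"; the format is a convention layered on top of them.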

The file format is not an arbitrary organization of data derived from a purely speculative view of the world. A file format is usually created and used because it is suited to certain devices and certain uses. When we delegate our vision to a series of algorithms and programs, we begin to realize the complexity of the connections that bind together the format, the device and the use.

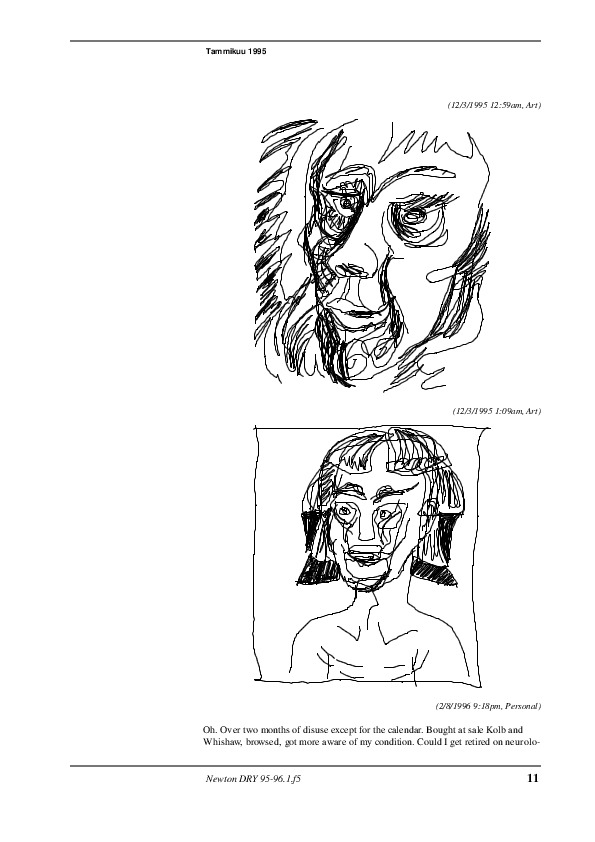

In 1993, Apple released the Newton MessagePad, the first so-called “Personal Digital Assistant”. A kind of forerunner to today’s smart/i phones, the device worked as a virtual notepad, allowing free-form entry of texts and drawings mixed together on the same virtual page. The user wrote with a plastic pen-like stylus; the movements were digitized and converted into “electronic ink”, and each note was stamped with a date and time. The device’s handwriting recognition software converted the user’s writing into text on the fly, a famously buggy process that became (to Apple’s chagrin) synonymous with the product. However, with some practice and patience, the device was said to “learn” from its user, and (more effectively) a compliant user could adapt his or her own writing to the device. Erkki was perfect for this kind of digital symbiosis and gave himself over fully to the machine.

2/24/94 11:45 pm

Home again. One glass and one attempt to install Newton software on Mac.

January 1994

Oh shit, the software is on high density floppies. Which means I shall instead take Newton to bed with me to see if it fulfils the basic need, why I bought it in the first place.

5/9/94 8:26 pm

Good that I masturbated already. I must take the particle sheets and consider each case separately again. Have thought about calling the cannabis supply. A fax went, I hope, to Mr Fantini. The beard is somewhat unpleasant. She closed the far side kitchen door. Newton seems to replace smoking. I can imagine a backlit colour Newton. Maybe a short walk after they leave. Both have pissed now. The blonde …

Today I intend to buy a bottle of wine. Very slowly I am beginning to understand the page layout logic of Newton.

Between 1994 and 1996 Kurenniemi wrote copious notes on the Newton. Between business meetings, on trains, in bed at night, he pushed the portability of the device to its limits, despite its somewhat ungainly size and lack of backlighting (he talks of needing a new bedside lamp so that he can take notes at night). Clearly the device excited Kurenniemi, and his use of it was at least in part driven by this excitement. The messages are short, typically written in between his other activities of the day and often used to comment upon them.

Kurenniemi’s Newton notes remind the reader of his prescience, not just regarding surface details (like his anticipation of a backlit color screen, which of course maps neatly onto today’s “Retina” smartphone displays), but also in terms of his use of the device: literally taking it to bed with him, and using it ritually, like cigarettes, to compulsively document things in short notes that mix personal and public spheres. The texts bear a clear relation to today’s “tweets” and micro-blogging practices.

It’s also striking how the Newton feeds Kurenniemi’s compulsions: using the Newton is itself a kind of “new drug” (a replacement for smoking), as well as a tool for procuring more substances (he uses the Newton’s capacity for sending faxes to arrange a cannabis delivery). At times, the line between substance and software, device and user blurs: “Newton had recognition problems, hangover I must have changed settings last night. Now a few more pages.”

Today the Newton is part of computer history. If we want to access the data, read the text and contemplate the images, we cannot work directly with the format in which they were originally saved. We need to work on files transferred into more contemporary formats. The exports on the hard drive are pdf files. One of the particularities of the pdf format is that it can hold together text and bitmap images as well as vector graphics. To give a human reader an experience close to reading the notes on the device, pdf is the format of choice. Perhaps Erkki was drawn to the device because it used the same gesture for drawing or sketching as for writing. The device had to guess from his handwriting which glyph he was drawing on the screen. For him, drawing or writing was all a matter of tracing. The pdf preserves this continuity between the different forms of input. (The device did not force the user to type on keys to write and then switch to the stylus for graphics.)
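Why text and drawings can sit side by side in a pdf becomes clearer when looking inside a page. In a pdf content stream, text is drawn with operators such as `BT`/`ET` and `Tj`, vector paths with `m` (move to), `l` (line to) and `S` (stroke), and images are placed with `Do`. The sketch below counts these operators in a hypothetical, uncompressed toy stream; it is our illustration, not the authors' tool. Real pdf streams are usually compressed and need a proper parser, and the regex used to skip literal strings ignores nested parentheses.

```python
import re

# Operator names from the PDF content-stream syntax (a subset only).
TEXT_OPS = {"BT", "ET", "Tf", "Td", "TD", "Tm", "Tj", "TJ"}
PATH_OPS = {"m", "l", "c", "v", "y", "h", "re", "S", "s", "f", "F", "f*", "B", "B*", "b", "b*"}
IMAGE_OPS = {"Do", "BI"}

# Literal strings such as (Home again.) are removed first so that words
# inside them are not mistaken for operators. Nested parentheses are not handled.
LITERAL_STRING = re.compile(r"\((?:\\.|[^\\)])*\)")


def census(stream: str) -> dict:
    """Count text, vector and image operators in an uncompressed content stream."""
    counts = {"text": 0, "vector": 0, "image": 0}
    for token in LITERAL_STRING.sub(" ", stream).split():
        if token in TEXT_OPS:
            counts["text"] += 1
        elif token in PATH_OPS:
            counts["vector"] += 1
        elif token in IMAGE_OPS:
            counts["image"] += 1
    return counts


# A hypothetical page: one line of text followed by a three-point pen stroke.
page = "BT /F1 12 Tf 72 712 Td (Home again.) Tj ET 72 600 m 150 650 l 200 610 l S"
print(census(page))  # {'text': 5, 'vector': 4, 'image': 0}
```

The text carries its characters inside the `Tj` string, which is why text extraction tools can recover it, whereas a drawing is only a sequence of coordinates and path operators.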

To process these elements with a computer algorithm, we first need to get at all this information. An obvious starting point is to query the textual entries of the logbook. For this we need to perform a series of operations that turn the content of the pdf into an object that a program can interpret as text and therefore query. The Linux system offers a series of utilities that, once chained together, allow us to perform the operations needed for the textification of the Newton’s output.
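The idea of chaining small utilities can be sketched in Python. This is a hedged illustration, not the authors' script: it assumes poppler's `pdftotext` command is installed for the first step (with `-` as output file, it writes to standard output), and the cleaning stages `drop_blank` and `squeeze_spaces` are hypothetical examples of what might follow it.

```python
import subprocess
from functools import reduce
from typing import Callable, Iterable, Iterator

Stage = Callable[[Iterable[str]], Iterator[str]]


def pdf_lines(pdf_path: str) -> Iterator[str]:
    """Source stage. Assumes poppler's `pdftotext` is installed; '-' sends its output to stdout."""
    result = subprocess.run(
        ["pdftotext", "-layout", pdf_path, "-"],
        capture_output=True, text=True, check=True,
    )
    yield from result.stdout.splitlines()


def drop_blank(lines: Iterable[str]) -> Iterator[str]:
    """Skip empty or whitespace-only lines."""
    return (line for line in lines if line.strip())


def squeeze_spaces(lines: Iterable[str]) -> Iterator[str]:
    """Collapse runs of whitespace into single spaces and trim the ends."""
    return (" ".join(line.split()) for line in lines)


def pipe(source: Iterable[str], *stages: Stage) -> Iterable[str]:
    """Feed the output of each stage into the next, like `a | b | c` in a shell."""
    return reduce(lambda stream, stage: stage(stream), stages, source)


# Usage with a real file (hypothetical name):
#   for line in pipe(pdf_lines("newton_notes.pdf"), drop_blank, squeeze_spaces):
#       print(line)
```

Each stage only knows how to read lines and emit lines, which is what makes the stages interchangeable, as with shell pipes.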

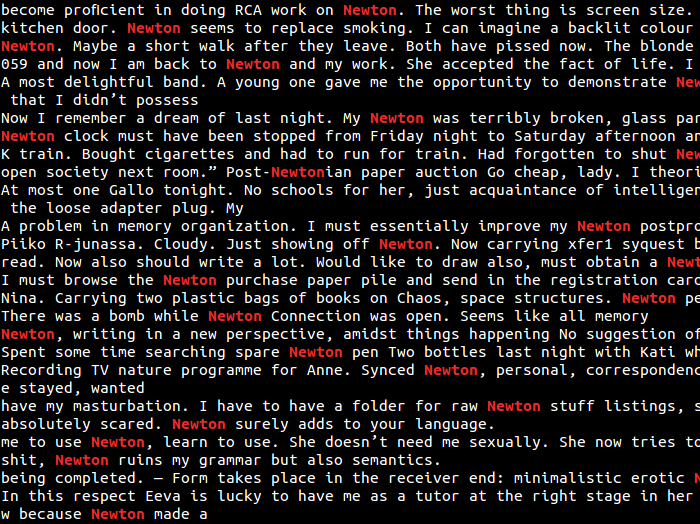

Grep is one of the basic “command-line” tools provided in GNU/Linux: one “pipes” a file into it together with a textual pattern to search for, and the program prints to the screen only the lines containing that pattern, highlighting the matches in color. The following figure shows how we use the tool on a text dump (itself obtained with another utility that extracts just the text content from the pdf), searching for the term “Newton”:
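In the shell, this step amounts to something like `pdftotext notes.pdf - | grep --color Newton` (the file name is hypothetical). The same filter-and-highlight behavior can be sketched in a few lines of Python; the ANSI escape codes used for the red highlight are an assumption, since grep's colors are configurable.

```python
import re
from typing import Iterable, Iterator

HIGHLIGHT = "\033[01;31m"  # bold red, a common terminal color for matches
RESET = "\033[0m"


def grep(pattern: str, lines: Iterable[str], color: bool = True) -> Iterator[str]:
    """Yield only the lines that match `pattern`, optionally highlighting each match."""
    regex = re.compile(pattern)
    for line in lines:
        if regex.search(line):
            if color:
                line = regex.sub(lambda m: f"{HIGHLIGHT}{m.group(0)}{RESET}", line)
            yield line


notes = [
    "Newton seems to replace smoking.",
    "Maybe a short walk after they leave.",
    "I can imagine a backlit colour Newton.",
]
for hit in grep("Newton", notes):
    print(hit)
```

Only the first and third notes are printed, with “Newton” shown in red on a color terminal.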

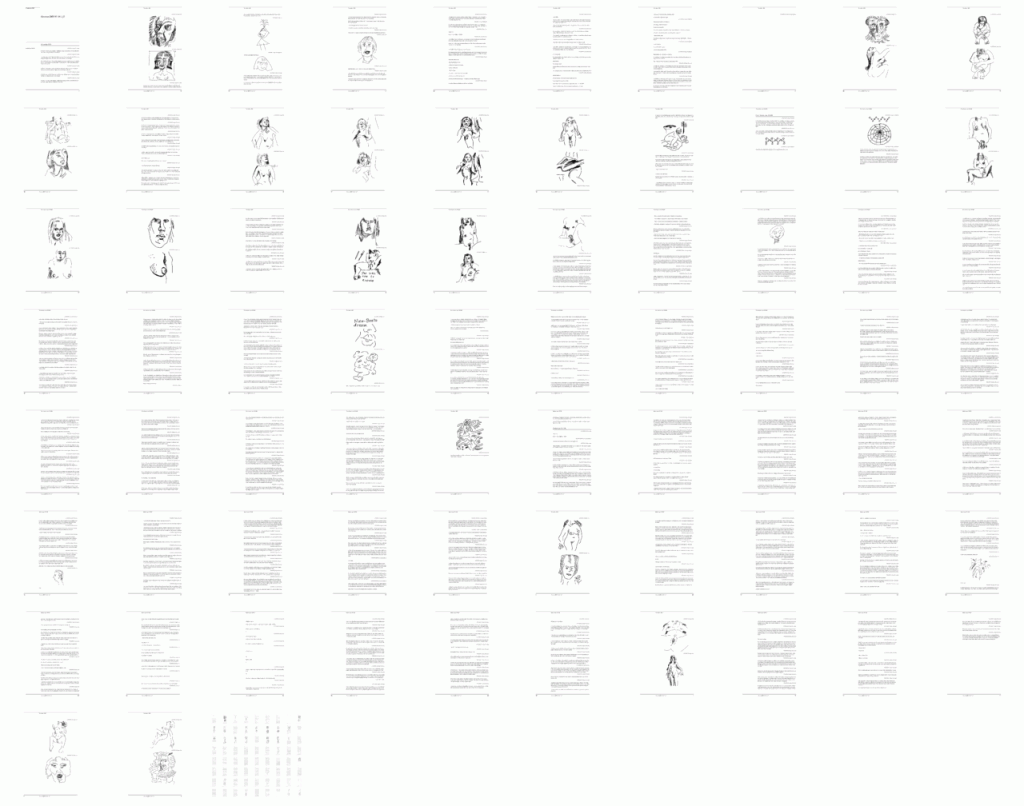

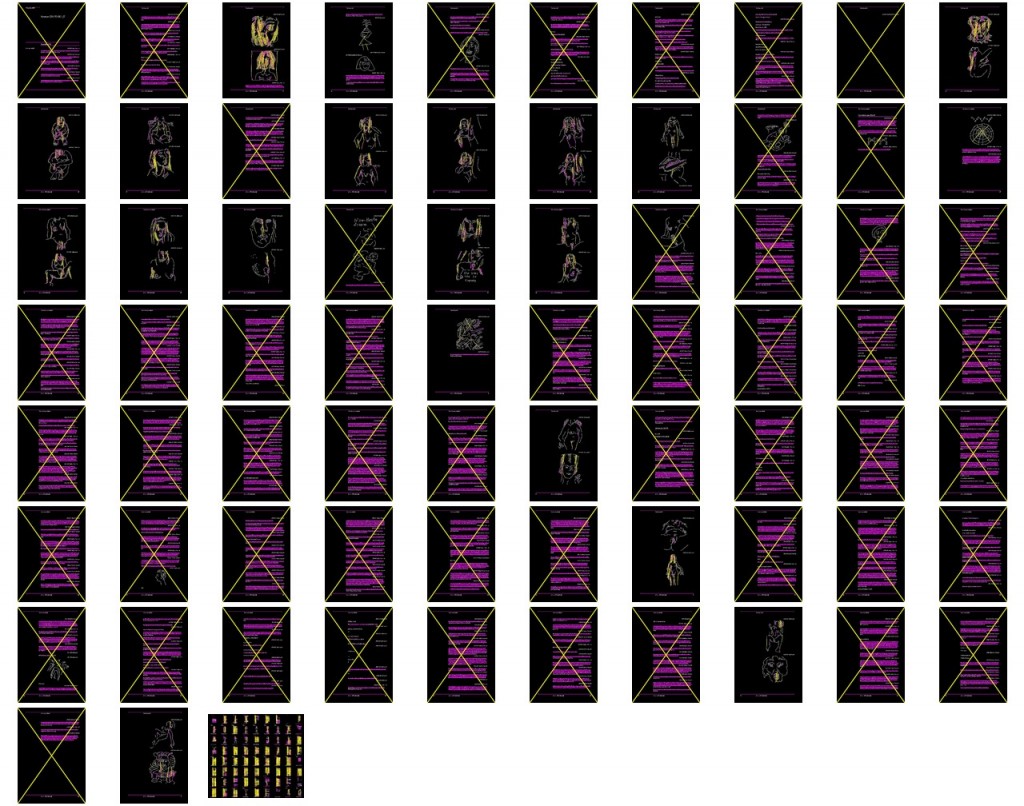

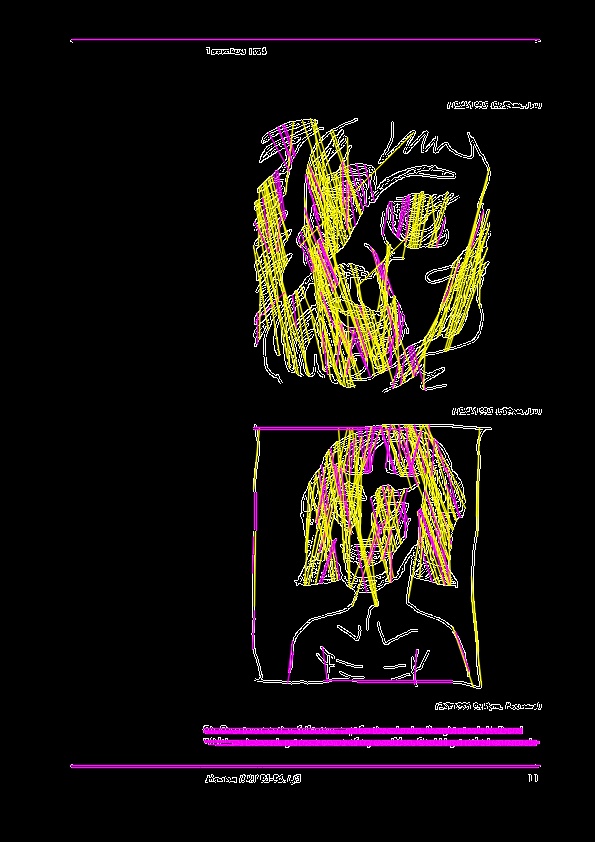

When we want to access the sketches embedded in the same files, we face a harder problem. For our computer vision algorithms to analyze, or read, an image, it must be exported in a format they can understand as an image. But while there are libraries that dump the text out of a pdf, we could not find any that would reliably extract the vectors and turn them into a bitmap format suited to our computer vision tasks. We had to follow another approach. As it was impossible to isolate the vectors alone, we decided to export the whole pdf as images. The question then became how to distinguish the regions of these images where the vector drawings were located from those where the text was reproduced.
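The workaround of exporting whole pages as images could be scripted as below. The essay does not name the tool that was used; this sketch assumes poppler's `pdftoppm`, whose `-r` option sets the resolution in DPI and `-png` selects PNG output (one file per page). The 100 DPI default and the output prefix are arbitrary, hypothetical choices.

```python
import subprocess
from pathlib import Path


def rasterize_command(pdf_path: str, out_prefix: str, dpi: int = 100) -> list:
    """Build a pdftoppm call that writes one PNG per page, named after `out_prefix`."""
    return ["pdftoppm", "-r", str(dpi), "-png", str(pdf_path), str(out_prefix)]


def rasterize(pdf_path: str, out_prefix: str, dpi: int = 100) -> None:
    """Render every page of the pdf, text and drawings alike, as a bitmap."""
    Path(out_prefix).parent.mkdir(parents=True, exist_ok=True)
    subprocess.run(rasterize_command(pdf_path, out_prefix, dpi), check=True)


# Hypothetical usage:
#   rasterize("newton_notes.pdf", "pages/page")
```

After this step the distinction between text and vectors is gone: both are just pixels, which is exactly the problem the next step has to solve.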

We began to look at the files we produced in the company of OpenCV. OpenCV does not read text as such, but scans and computes bitmap images. We needed to come up with a working definition, in visual terms, of what constitutes a vector image in the context of our document set. The test we wanted to submit the images to was the following: where the image contains a certain number of horizontal lines, the zones where they are concentrated contain text; where there is a significant number of non-horizontal lines, there is a strong chance that we have located the representation of a sketch. Through the intermediary of the OpenCV library we could borrow the concept of a line as defined by Paul Hough. We used a function, cvHoughLines2, that efficiently detects the different lines present in an image. The technique it implements, the Hough transform, is named after Paul Hough, whose patent on the method dates from 1962; it was later refined, improved and transformed several times before taking the form we know today in computer vision.
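The test described above can be written down as a small rule over line segments, each given by its endpoints `(x1, y1, x2, y2)`. In this sketch a segment counts as horizontal when its angle is within a few degrees of the horizontal, and a page is flagged as a probable sketch when enough of its segments are not horizontal. Both thresholds (`tolerance`, `min_ratio`) are hypothetical values, not ones reported by the authors.

```python
import math
from typing import Sequence, Tuple

Segment = Tuple[int, int, int, int]  # (x1, y1, x2, y2)


def angle_deg(seg: Segment) -> float:
    """Orientation of a segment in degrees, folded into [0, 180)."""
    x1, y1, x2, y2 = seg
    return math.degrees(math.atan2(y2 - y1, x2 - x1)) % 180


def is_horizontal(seg: Segment, tolerance: float = 5.0) -> bool:
    """True when the segment lies within `tolerance` degrees of the horizontal."""
    a = angle_deg(seg)
    return a <= tolerance or a >= 180 - tolerance


def looks_like_sketch(segments: Sequence[Segment], min_ratio: float = 0.2) -> bool:
    """Flag a page when at least `min_ratio` of its segments are not horizontal."""
    if not segments:
        return False
    slanted = sum(1 for seg in segments if not is_horizontal(seg))
    return slanted / len(segments) >= min_ratio
```

Folding the angle into [0, 180) makes the rule indifferent to the direction in which a segment was traced, so a stroke drawn right to left counts the same as one drawn left to right.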

“In automated analysis of digital images, a subproblem often arises of detecting simple shapes, such as straight lines, circles or ellipses. In many cases an edge detector can be used as a pre-processing stage to obtain image points or image pixels that are on the desired curve in the image space. Due to imperfections in either the image data or the edge detector, however, there may be missing points or pixels on the desired curves as well as spatial deviations between the ideal line/circle/ellipse and the noisy edge points as they are obtained from the edge detector. For these reasons, it is often non-trivial to group the extracted edge features to an appropriate set of lines, circles or ellipses. The purpose of the Hough transform is to address this problem by making it possible to perform groupings of edge points into object candidates by performing an explicit voting procedure over a set of parameterized image objects (Shapiro and Stockman, 304).”1
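The voting procedure described in this quotation can be shown without OpenCV. In the usual parameterization, a line is described by an angle θ and a distance ρ from the origin, with ρ = x cos θ + y sin θ; every edge point votes for all the (θ, ρ) cells of lines that could pass through it, and cells that collect many votes are line candidates. The pure-Python sketch below is a teaching version (coarse bins, no edge detection), not the implementation used inside OpenCV.

```python
import math
from collections import Counter
from typing import Iterable, List, Tuple


def hough_lines(points: Iterable[Tuple[float, float]], theta_steps: int = 180,
                rho_step: float = 1.0, top: int = 1) -> List[Tuple[float, float, int]]:
    """Return the `top` (theta_degrees, rho, votes) cells with the most votes."""
    votes = Counter()
    for x, y in points:
        for t in range(theta_steps):
            theta = math.pi * t / theta_steps
            # The line through (x, y) whose normal makes angle theta with the
            # x axis lies at distance rho from the origin: one vote per angle.
            rho = x * math.cos(theta) + y * math.sin(theta)
            votes[(t, round(rho / rho_step))] += 1
    return [(180.0 * t / theta_steps, r * rho_step, n)
            for (t, r), n in votes.most_common(top)]


# Edge points along the row y = 10 (a line of text, say) plus two stray points.
row = [(x, 10) for x in range(100)] + [(3, 50), (70, 2)]
print(hough_lines(row))  # [(90.0, 10.0, 100)]
```

A horizontal row of points wins at θ = 90°, because its normal points straight down the y axis; a vertical stroke would win at θ = 0°. This is what makes the angle of a detected line available for the horizontal/non-horizontal test.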

The presence of non-horizontal lines gives us a hint for selecting the pages with images.

OpenCV traces the detected lines over the image. Non-horizontal lines are detected where there is a drawing.

In the last figure, the pages that only contain horizontal lines are discarded.
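A present-day version of this tracing and filtering step might look as follows. The essay mentions the older C function `cvHoughLines2`; this sketch instead assumes OpenCV's Python bindings (`cv2`) and uses `cv2.HoughLinesP`, the probabilistic variant, which returns line segments directly. The Canny thresholds, the Hough parameters, the 10% slope tolerance in `is_flat` and the `min_slanted` cut-off are all hypothetical values chosen for illustration. `cv2` is imported inside `trace_lines` so that the helper functions can be used without it.

```python
import math
from typing import List, Optional, Sequence, Tuple

Segment = Tuple[int, int, int, int]


def to_segments(lines: Optional[Sequence]) -> List[Segment]:
    """cv2.HoughLinesP returns None or an array shaped (N, 1, 4); flatten it to tuples."""
    if lines is None:
        return []
    return [tuple(int(v) for v in line[0]) for line in lines]


def is_flat(seg: Segment, max_slope: float = 0.1) -> bool:
    """Treat a segment as horizontal when it rises less than 10% of its run."""
    x1, y1, x2, y2 = seg
    return abs(y2 - y1) <= max_slope * abs(x2 - x1)


def keep_page(segments: Sequence[Segment], min_slanted: int = 3) -> bool:
    """Keep a page only if it has enough non-horizontal segments to suggest a drawing."""
    return sum(1 for seg in segments if not is_flat(seg)) >= min_slanted


def trace_lines(page_png: str, overlay_png: str) -> List[Segment]:
    """Detect segments on a page image and draw them over it: flat in green, slanted in red."""
    import cv2  # OpenCV Python bindings, assumed installed

    gray = cv2.imread(page_png, cv2.IMREAD_GRAYSCALE)
    if gray is None:
        raise FileNotFoundError(page_png)
    edges = cv2.Canny(gray, 50, 150)
    segments = to_segments(cv2.HoughLinesP(edges, 1, math.pi / 180, 50,
                                           minLineLength=20, maxLineGap=5))
    overlay = cv2.cvtColor(gray, cv2.COLOR_GRAY2BGR)
    for x1, y1, x2, y2 in segments:
        color = (0, 200, 0) if is_flat((x1, y1, x2, y2)) else (0, 0, 255)  # BGR order
        cv2.line(overlay, (x1, y1), (x2, y2), color, 2)
    cv2.imwrite(overlay_png, overlay)
    return segments


# Hypothetical usage over the rasterized pages:
#   pages = {p: trace_lines(p, p.replace(".png", "-lines.png")) for p in page_files}
#   sketches = [p for p, segs in pages.items() if keep_page(segs)]
```

Pages for which `keep_page` returns False, those with only horizontal lines, are the ones discarded.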

This long example of our itinerary through the Newton logbook gives an idea of the dialogue that takes place between different instances and algorithms when we want to delegate our vision to a computer program. Along the way, what is considered an image or a text is recoded and redefined many times. Depending on the question we want to ask, a text can be a series of glyphs detected from handwriting, a character stream that can be parsed for the presence of a word, or a horizontal line. An image can be a series of vectors captured from the movement of the stylus, information embedded deep in the pdf format and hard to access, or a region of a bitmap that contains a significant number of non-horizontal lines.

We realize how much the definition of an image is coming to depend on the definitions of data and format, and on the configuration of the file at the bit level. The questions we ask about the images are framed and influenced by these possible definitions. But what we are learning here would be too limited if we could not connect it to other dimensions. Detailed attention to pixel organization, embedded metadata and the internal redefinition of the image taxonomy is only of interest if it is connected back to the practices, social interactions and utopias in which these images are integrated.

1. See Wikipedia, http://en.wikipedia.org/wiki/Hough_transform